AI Certification: New Project Explores How Artificial Intelligence Can Become Trustworthy

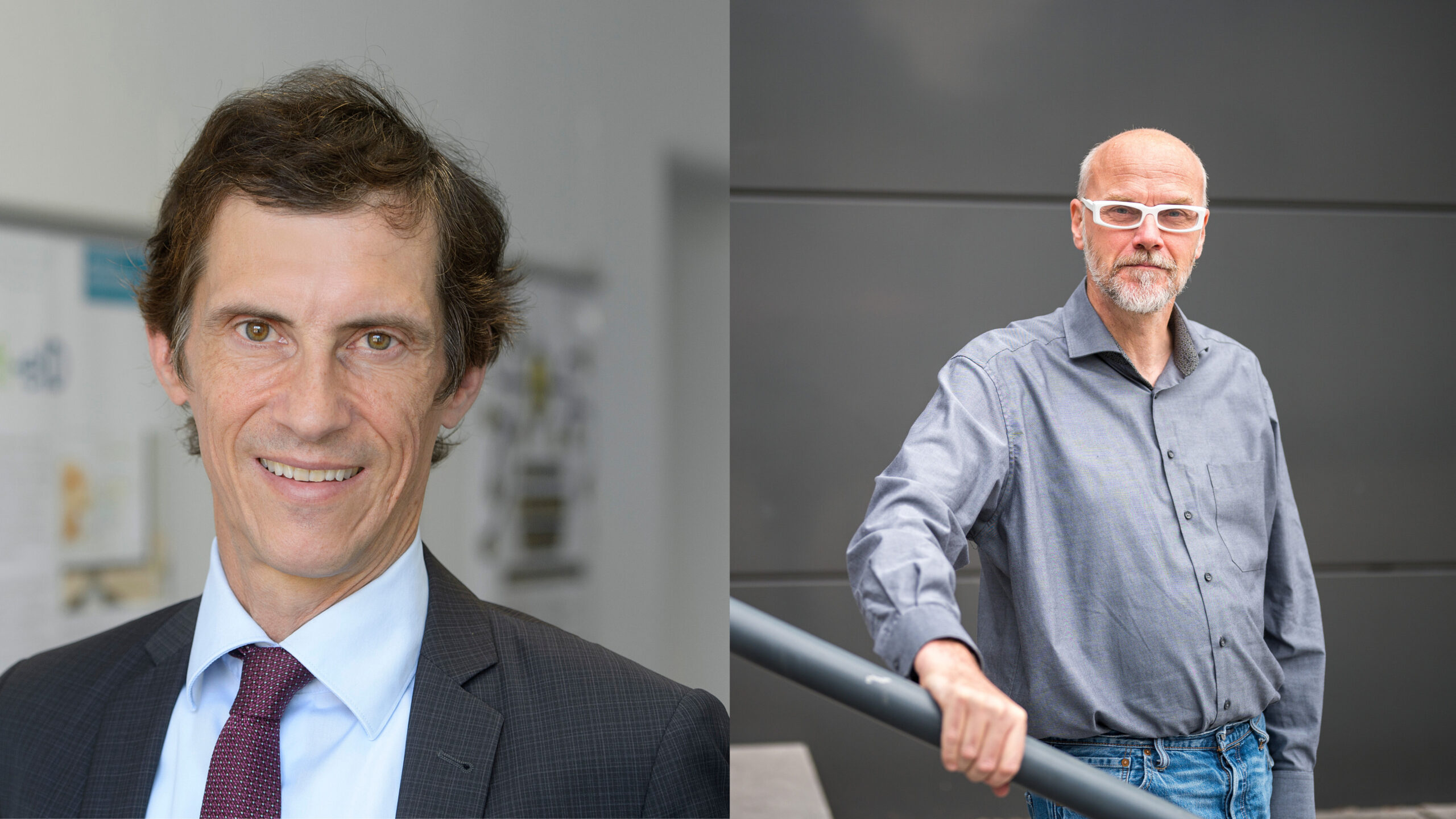

Left: Prof. Georg Borges, Executive Director of the Institute of Legal Informatics, Saarland University (Photo: Iris Maurer). Right: Prof. Holger Hermanns, Professor of Computer Science, Saarland University (Photo: UdS/Oliver Dietze).

How can the certification of AI systems help regulate Artificial Intelligence and build trust in the technology? This is the question being explored by German and Brazilian research teams. The project brings together research groups from Saarland University and the University of São Paulo, with funding from the German Research Foundation (DFG) and the Brazilian partner organization CAPES.

On August 1, the Institute of Legal Informatics will host an international workshop on AI certification.

Online participation is free of charge.

When an independent body formally certifies that certain requirements and standards are reliably met, it builds confidence. In their own interest, companies use certification to demonstrate that their products or services comply with high standards. Certification bodies such as TÜV, DEKRA, or Germany’s Federal Office for Information Security (BSI) assess and confirm quality and safety. Customers can rely on this assurance.

“AI systems can also gain trust and acceptance through certification,” says Professor Georg Borges, Director of the Institute of Legal Informatics at Saarland University.

The European Union’s Artificial Intelligence Act (AI Act), a milestone in the regulation of AI that came into force on August 1, 2024, requires so-called “conformity assessments” for high-risk AI systems. For AI systems that pose a significant risk to health, safety, or fundamental rights, the manufacturer is generally responsible for checking whether the systems meet the requirements and standards set out in applicable laws and regulations. In exceptional cases, the AI Act also requires certification by independent bodies. For non-high-risk AI systems, certification is voluntary.

“Artificial Intelligence holds great potential, but its risks create uncertainty. This is where our research project comes in. We examine AI certification as a regulatory instrument. Certification offers a promising way to address both the rapid pace of digital markets and the regulatory need for oversight and safety,” explains Georg Borges.

Borges, an expert in IT law and legal informatics, is analyzing the legal implications of AI certification in Europe, Germany, and Brazil together with the teams of computer science professor Holger Hermanns, also at Saarland University, and law professor Juliano Souza de Albuquerque Maranhão of the University of São Paulo.

The research project, titled Potential of AI System Certification for Regulating Artificial Intelligence, began in May 2025. The teams are assessing different types of certification and their regulatory frameworks.

“Certification can confirm that AI systems meet applicable standards, legal requirements, and other guidelines. It attests not only to their technical reliability, but also to the fact that they operate safely, transparently, and fairly, and handle data responsibly,” says Borges. “We are examining the key aspects that define responsible and trustworthy AI, as well as the consistency and adequacy of international standards.”

The goal of the project is to enable the safe use of AI systems across various economic sectors and areas of life.

“Certification also has the potential to develop internationally into a promising regulatory instrument, even in countries that reject state regulation. It serves not only the interests of users, who demand safe AI systems, but also the interests of AI providers, who can use it to prove the quality of their systems. In this way, certification can provide a strong incentive for compliance,” Borges notes.

Approaches to a legal framework for Artificial Intelligence vary widely across the world. Europe has chosen regulation, while in Brazil, an AI law influenced by the EU AI Act is currently making its way through the legislative process. In contrast, there is ongoing debate in the United States about excluding AI from any state oversight.

The project, funded by the DFG and CAPES, builds on extensive research by Saarbrücken-based teams. For years, Georg Borges and Holger Hermanns have collaborated with colleagues from various disciplines at the Saarbrücken campus to make intelligent systems explainable and transparent. Their joint project Explainability of Intelligent Systems (EIS), funded by the Volkswagen Foundation, is part of the initiative Artificial Intelligence and Its Impact on Tomorrow’s Society.

On behalf of the German Federal Ministry for Economic Affairs, Borges has also developed a data protection certification for cloud services, along with a corresponding test standard, the Trusted Cloud Data Protection Profile for Cloud Services (TCDP), and a related code of conduct.

As part of its annual International Summer School on IT Law and Legal Informatics (July 28 – August 6, 2025), the Institute of Legal Informatics will host a workshop on AI certification on Friday, August 1. The workshop is open to all interested participants. Experts will examine the advantages and challenges of certifying AI systems from an interdisciplinary perspective.

Further information, the full program, and the link for online participation are available on the Institute’s website:

https://www.rechtsinformatik.saarland

Contact:

Prof. Dr. Georg Borges:

Tel.: +49 681 302-3105

Email: ls.borges(at)uni-saarland.de